This article is the second part of a three-part series. In this part, we discuss how to return custom mock responses using an Azure API Management (APIM) policy. The parts of the series are listed below.

- Mocking Custom Responses with Azure API Management – Simple Mock Response

- Mocking Custom Responses with Azure API Management – Custom Mock Response

- Mocking Custom Responses with Azure API Management – Custom Mock Response from External Files

Custom mock responses

Often, a bare 200 OK response with no body is not enough. Instead, we need realistic responses formatted as JSON messages. To add to the complexity, the response often has to vary based on specific query string parameters.

Here’s the approach I used to generate custom mock responses in Azure API Management that vary with the query string parameters of the request.

Navigate to your API Management instance and select the specific API operation you want to configure.

In the Inbound processing section, select the `</>` icon to open the policy code editor.

We will modify the inbound section of the policy by adding a `choose` block that inspects the `id` query string parameter and returns a different response body for each value.

Here is the code I used:

```xml
<inbound>
    <base />
    <choose>
        <!-- Return student 1 when the request contains ?id=1 -->
        <when condition="@(context.Request.Url.Query.GetValueOrDefault("id") == "1")">
            <return-response>
                <set-status code="200" reason="OK" />
                <set-header name="Content-Type" exists-action="override">
                    <value>application/json</value>
                </set-header>
                <set-body>{
    "studentId": "1",
    "name": "Jane Smith",
    "grade": "B"
}</set-body>
            </return-response>
        </when>
        <!-- Return student 2 when the request contains ?id=2 -->
        <when condition="@(context.Request.Url.Query.GetValueOrDefault("id") == "2")">
            <return-response>
                <set-status code="200" reason="OK" />
                <set-header name="Content-Type" exists-action="override">
                    <value>application/json</value>
                </set-header>
                <set-body>{
    "studentId": "2",
    "name": "Jane Smith",
    "grade": "B"
}</set-body>
            </return-response>
        </when>
        <!-- Any other id (or no id at all) returns 404 with an error message -->
        <otherwise>
            <return-response>
                <set-status code="404" reason="Not Found" />
                <set-header name="Content-Type" exists-action="override">
                    <value>application/json</value>
                </set-header>
                <set-body>{
    "error": "Student ID not found"
}</set-body>
            </return-response>
        </otherwise>
    </choose>
</inbound>
```

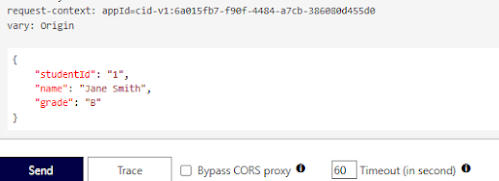

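You can test the outcome by opening the Test tab for the operation and adding the `id` query string parameter with the value you want to check, as shown below.

Then, submit the request and verify that the response matches the parameter: `id=1` and `id=2` return the corresponding student record with 200 OK, and any other value (or no `id` at all) returns 404 Not Found with the error message.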
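If you also want to check the mock from outside the portal, a small script can send one request per `id` value and compare the status codes with what the policy should return. This is a minimal sketch, not part of APIM itself. It assumes that the operation is reachable at a gateway URL you supply and that the API requires a subscription key in APIM's default `Ocp-Apim-Subscription-Key` header. The function names and the sample id `3` (a stand-in for any unknown student) are hypothetical.

```python
import json
import urllib.error
import urllib.parse
import urllib.request

# Hypothetical expectations mirroring the <choose> block in the policy:
# ids 1 and 2 hit a <when> branch, "3" stands in for any unknown id.
EXPECTED_STATUS = {"1": 200, "2": 200, "3": 404}


def build_request(base_url: str, student_id: str, subscription_key: str) -> urllib.request.Request:
    """Build a GET request that passes the id as a query string parameter."""
    query = urllib.parse.urlencode({"id": student_id})
    return urllib.request.Request(
        f"{base_url}?{query}",
        # APIM reads the subscription key from this header by default.
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
        method="GET",
    )


def fetch(request: urllib.request.Request) -> tuple[int, dict]:
    """Send the request and return (status code, parsed JSON body)."""
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status, json.load(response)
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx; the mocked 404 still carries a JSON body.
        return err.code, json.load(err)


def run_smoke_test(base_url: str, subscription_key: str) -> bool:
    """Call the operation once per id and report whether each status matches."""
    all_ok = True
    for student_id, expected in EXPECTED_STATUS.items():
        status, body = fetch(build_request(base_url, student_id, subscription_key))
        ok = status == expected
        all_ok = all_ok and ok
        print(f"id={student_id}: {status} {body} {'OK' if ok else 'UNEXPECTED'}")
    return all_ok
```

For example, calling `run_smoke_test("https://<your-apim-name>.azure-api.net/<api-path>/<operation-path>", "<your-subscription-key>")` with your own gateway URL and key prints one line per id and returns `True` when every status code matches the policy. The request building is kept separate from the network call so the query string and headers can be checked without contacting the gateway.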